By 苏剑林 | July 02, 2015

Latest Update: "Efficient Implementation of the Apriori Algorithm with Numpy"

Recently, while working on data mining, I came across the Apriori algorithm. Since I hadn't specialized in this field before, I wasn't familiar with it, but now that it's come up in my work, I had to study it seriously. The Apriori algorithm is used for finding association rules—essentially discovering potential logic from a large dataset. For example, "Condition A + Condition B" is very likely to lead to "Condition C" ($A + B \to C$); this is an association rule. Specifically, for instance, after a customer buys product A, they often buy product B (conversely, buying B doesn't necessarily mean they buy A). Or more complexly, customers who buy both A and B are likely to buy C (again, the reverse is not necessarily true). With this information, we can bundle certain products to achieve higher revenue. The algorithms used to seek these association rules are called association analysis algorithms.

Beer and Diapers

Beer and Diapers

In the world of association algorithms, the most frequently cited case is probably "Beer and Diapers." The story originated in the 1990s at Walmart supermarkets in the United States. Managers discovered that two seemingly unrelated items, beer and diapers, frequently appeared in the same shopping basket. After analysis, they found that in American households with infants, the mother usually stayed home to look after the baby while the young father went to the supermarket to buy diapers. While buying diapers, the father often picked up some beer for himself, resulting in these two unrelated items frequently appearing together. Consequently, Walmart tried placing beer and diapers in the same area so fathers could find both easily. The results were quite successful!

(Note: The authenticity of this story is often questioned, but regardless, it has become a famous and simple case for introducing association analysis.)

The Apriori Algorithm

How do we find association rules like "Beer and Diapers" from a massive set of purchase records? There are many association analysis algorithms, and the simplest is likely the Apriori algorithm (though it isn't the most efficient, it's excellent as an introductory algorithm). Detailed descriptions of the Apriori algorithm are not provided in this article because there is already a wealth of information online. Recommended reading:

https://en.wikipedia.org/wiki/Association_rule_learning

http://hackerxu.com/2014/10/18/apriori.html

Python Implementation

After several days of debugging, I finally implemented a relatively efficient Apriori script using Python. Here, "efficient" refers to the implementation of the Apriori algorithm itself, without involving fundamental improvements to the algorithm logic. The script utilizes the Pandas library to ensure performance while minimizing the amount of code. Readers will find that this code is shorter and more efficient than many Apriori implementations found online (not limited to Python).

The code is compatible with both Python 2.x and 3.x, provided Pandas is installed. This code can generally handle problems involving tens of thousands of records and dozens of candidate items, provided you have a little patience.

Efficiency Issues

The execution time of the Apriori algorithm depends on many factors, such as the volume of data, the minimum support (though it has little to do with minimum confidence), and the number of candidate items. Using market basket analysis as an example: first, the execution time naturally depends on the number of records $N$, though the relationship with $N$ is only linear. Second, the minimum support is almost decisive; it significantly impacts execution time, though its exact effect depends on the specific problem; furthermore, it largely determines the number of rules eventually generated. Finally, the number of candidate items $k$ (the total number of unique products appearing across all records) is also critical. If $k$ is large, the subsequent joins will result in the number of items scaling as $k^2, k^3, \dots$ (approximately), which has a fatal impact on speed.

Therefore, while the logic of the Apriori algorithm is simple, its efficiency is not high.

Code

#-*- coding: utf-8 -*-

from __future__ import print_function

import pandas as pd

# Custom string connection function for merging itemsets

def connect_string(x, ms):

x = list(map(lambda i:sorted(i.split(ms)), x))

l = len(x[0])

r = []

for i in range(len(x)):

for j in range(i+1, len(x)):

if x[i][:l-1] == x[j][:l-1] and x[i][l-1] != x[j][l-1]:

r.append(ms.join(sorted(list(set(x[i]) | set(x[j])))))

return r

# Main function to find association rules

def find_rule(d, support, confidence, ms = u'--'):

result = pd.DataFrame(index=['support', 'confidence']) # Outcome matrix

support_series = 1.0 * d.sum() / len(d) # Support for 1-itemsets

column = list(support_series[support_series > support].index) # Preliminary filtering

k = 0

while len(column) > 1:

k = k + 1

print(u'\nSearching for %s-itemsets...' % k)

column = connect_string(column, ms)

print(u'Number of candidate sets: %s' % len(column))

sf = lambda i: d[i.split(ms)].prod(axis=1, numeric_only=True) # New support function

# This part counts mentions. Since it uses matrix multiplication, it's quite fast.

d_2 = d[column[0].split(ms)].prod(axis=1, numeric_only=True)

d_2 = pd.DataFrame(d_2, columns=[column[0]])

for i in range(1, len(column)):

d_2[column[i]] = d[column[i].split(ms)].prod(axis=1, numeric_only=True)

support_series_2 = 1.0 * d_2[column].sum() / len(d) # Calculate support

column = list(support_series_2[support_series_2 > support].index) # Filter by support

support_series = support_series.append(support_series_2)

column2 = []

for i in column: # Construct rules

i = i.split(ms)

for j in range(len(i)):

column2.append([ms.join(i[:j] + i[j+1:]), i[j]])

for i, j in column2: # Calculate confidence

conf = support_series[ms.join(sorted([i, j] if isinstance(j, list) else i.split(ms) + [j]))] / support_series[i]

if conf > confidence:

result[i + '-->' + j] = [support_series[ms.join(sorted(i.split(ms) + [j]))], conf]

result = result.T.sort_values(['confidence','support'], ascending = False) # Sort results

print(u'\nResults:')

print(result)

return result

# Usage example:

# d = pd.read_csv('apriori.txt', header=None, dtype=object)

# d = pd.get_dummies(d.unstack()).sum(level=1) # Convert to 0-1 matrix

# find_rule(d, 0.01, 0.5)

Test dataset: apriori.txt

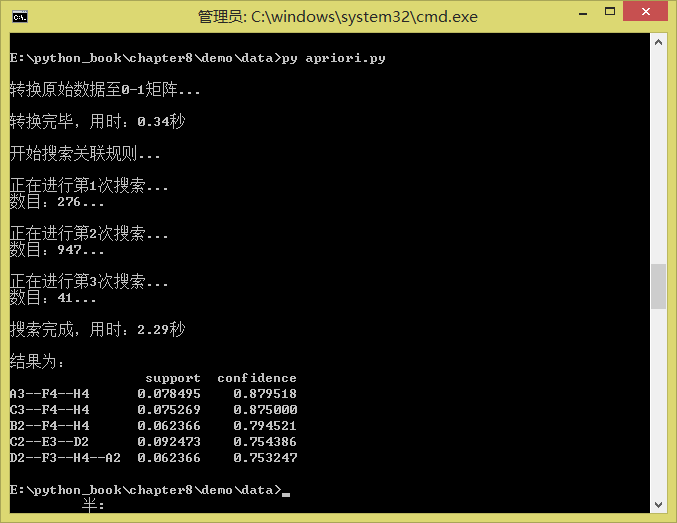

Execution result:

apriori

If you found this article helpful, you are welcome to share it or provide a donation. Donations are not for profit, but rather to see how much genuine attention Scientific Spaces has gained from its readers. Of course, if you choose to ignore it, it will not affect your reading experience. Thank you again!