Official GPT2 Block Diagram

By 苏剑林 | March 16, 2020

A while ago, I noticed that someone had open-sourced a Chinese GPT2 model, and it is the largest version, with 1.5 billion parameters. Judging from the author's demo, the generated text is quite impressive, so I wanted to load it into my bert4keras and play with it. However, the overall architecture of early bert4keras was written rather "rigidly," which made it very inconvenient to integrate different models. Two weeks ago, I finally couldn't stand it anymore and rewrote the overall structure of bert4keras. It is now fairly flexible for writing various Transformer-based models; GPT2 and T5, for example, have already been integrated.

GPT is something many readers have likely heard of. Simply put, it is a language model built on the Transformer architecture, introduced in the paper "Improving Language Understanding by Generative Pre-Training". However, it was not created just to be a language model. It uses language modeling to pre-train itself and is then fine-tuned on downstream tasks to improve their performance. It pioneered the "Transformer + pre-training + fine-tuning" paradigm; by comparison, BERT could be considered its "junior." GPT2 is the upgraded version of GPT, with a larger model and more training data, and its largest version reaches 1.5 billion parameters.

Most readers who have seen promotional articles for GPT2 have been amazed by its generation capabilities. However good it is, though, it works in someone else's language: OpenAI did not train a Chinese version. The good news is that a project called GPT2_ML has open-sourced a Chinese GPT2, and at the largest 1.5-billion-parameter scale.

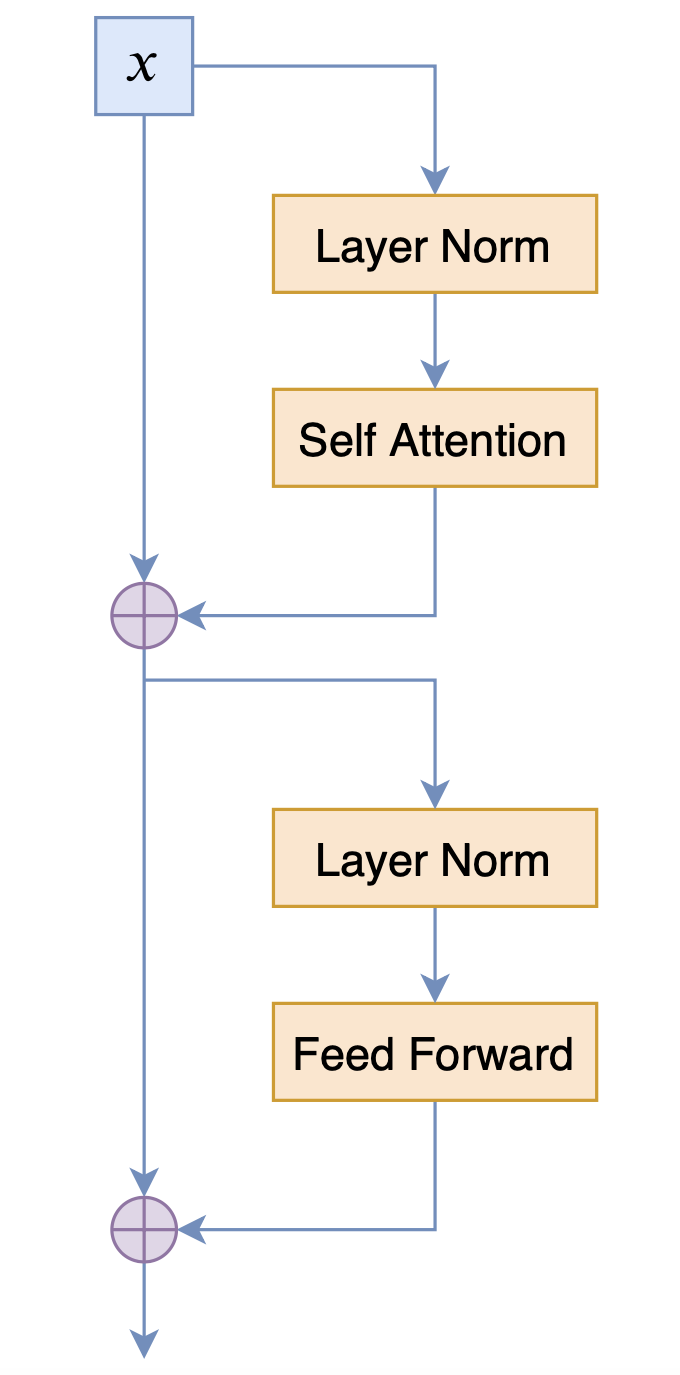

The GPT2 currently integrated into bert4keras is the one released by the GPT2_ML project rather than OpenAI's, since bert4keras is aimed primarily at Chinese. Note that the GPT2_ML model structure differs both from OpenAI's GPT2 and from BERT. The three blocks compare as follows:
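The difference between the three blocks comes down to where LayerNorm and the residual additions sit, so a minimal NumPy sketch may help. It is an illustration under assumptions, not bert4keras code. Attention and the feed-forward network are replaced by placeholder per-position linear maps (`attn` and `ffn` are hypothetical stand-ins with no token mixing and no causal mask), and LayerNorm has no learned scale or bias. The `gpt2_ml_block` layout is our reading of the Grover-based code that GPT2_ML builds on, so check it against the project's source. BERT normalizes after each residual sum (Post-LN). OpenAI's GPT2 normalizes each sublayer's input and leaves the residual stream un-normalized (Pre-LN, with one extra LayerNorm after the last block).

```python
import numpy as np

rng = np.random.default_rng(0)
D = 8  # toy hidden size (illustrative only)
W_attn = rng.normal(size=(D, D)) / np.sqrt(D)  # placeholder for self-attention
W_ffn = rng.normal(size=(D, D)) / np.sqrt(D)   # placeholder for the feed-forward net


def layer_norm(x, eps=1e-6):
    """Normalize each row to zero mean and unit variance (no learned scale/bias)."""
    mu = x.mean(-1, keepdims=True)
    var = x.var(-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def attn(x):
    return x @ W_attn  # hypothetical stand-in: no mixing across positions


def ffn(x):
    return np.maximum(x @ W_ffn, 0.0)  # hypothetical stand-in


def bert_block(x):
    """Post-LN: normalize after each residual addition."""
    x = layer_norm(x + attn(x))
    return layer_norm(x + ffn(x))


def openai_gpt2_block(x):
    """Pre-LN: normalize the sublayer input; the residual path stays un-normalized."""
    x = x + attn(layer_norm(x))
    return x + ffn(layer_norm(x))


def gpt2_ml_block(x):
    """Assumed GPT2_ML layout: plain attention residual, then a pre-normalized
    FFN whose residual sum is normalized again."""
    x = x + attn(x)
    return layer_norm(x + ffn(layer_norm(x)))


x = rng.normal(size=(4, D))  # 4 toy token positions
for block in (bert_block, openai_gpt2_block, gpt2_ml_block):
    print(block.__name__, block(x).shape)
```

Because the BERT block and the assumed GPT2_ML block both end in a LayerNorm, their output rows have zero mean and unit variance, while the Pre-LN output does not.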

First, download the model weights at the following address:

Link: https://pan.baidu.com/s/1OXBd16o82SpIzu57kwA8Mg

Extraction code: q79r

The main file "model.ckpt-100000.data-00000-of-00001" can also be downloaded from Google Drive. After downloading, please check that the SHA256 of model.ckpt-100000.data-00000-of-00001 is 4a6e5124df8db7ac2bdd902e6191b807a6983a7f5d09fb10ce011f9a073b183e.
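The checksum can be verified with a few lines of Python. This is a minimal sketch using the standard `hashlib` module. It reads the file in 1 MiB chunks so that the multi-gigabyte checkpoint never has to fit in memory. The helper names `sha256_of_file` and `checkpoint_is_intact` are our own.

```python
import hashlib

# Checksum quoted above for model.ckpt-100000.data-00000-of-00001
EXPECTED_SHA256 = "4a6e5124df8db7ac2bdd902e6191b807a6983a7f5d09fb10ce011f9a073b183e"


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA256 of a file, reading it chunk by chunk."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a b"" sentinel keeps calling f.read until end of file
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checkpoint_is_intact(path, expected=EXPECTED_SHA256):
    """True if the file's SHA256 matches the expected checksum."""
    return sha256_of_file(path) == expected.lower()
```

For example, `checkpoint_is_intact('/root/gpt2/model.ckpt-100000.data-00000-of-00001')` should return `True`. The path here matches the `checkpoint_path` directory in the test code and is only an assumption about your setup.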

Then install bert4keras 0.6.0 or later (the latest version at the time of writing) and run the following test code. If it stops working after later updates, see the latest version in basic_language_model_gpt2_ml.py:

```python
#! -*- coding: utf-8 -*-
# Basic test: Chinese GPT2 model
# Introduction link: https://kexue.fm/archives/7292

import numpy as np
from bert4keras.models import build_transformer_model
from bert4keras.tokenizers import Tokenizer
from bert4keras.snippets import AutoRegressiveDecoder
from bert4keras.snippets import uniout  # for printing Chinese text readably

config_path = '/root/gpt2/config.json'
checkpoint_path = '/root/gpt2/model.ckpt-100000'
dict_path = '/root/gpt2/vocab.txt'

# Build the tokenizer (no start/end tokens are added around the text)
tokenizer = Tokenizer(dict_path, token_start=None, token_end=None, do_lower_case=True)

# Build the model and load the weights
model = build_transformer_model(config_path=config_path, checkpoint_path=checkpoint_path, model='gpt2_ml')


class ArticleCompletion(AutoRegressiveDecoder):
    """Article continuation based on random sampling
    """
    @AutoRegressiveDecoder.set_rtype('probas')
    def predict(self, inputs, output_ids, step):
        # Append the ids generated so far to the prompt,
        # then return the next-token distribution at the last position
        token_ids = np.concatenate([inputs[0], output_ids], 1)
        return model.predict(token_ids)[:, -1]

    def generate(self, text, n=1, topk=5):
        token_ids, _ = tokenizer.encode(text)
        results = self.random_sample([token_ids], n, topk)  # Based on random sampling
        return [text + tokenizer.decode(ids) for ids in results]


article_completion = ArticleCompletion(
    start_id=None,
    end_id=511,  # 511 is the Chinese period
    maxlen=256,
    minlen=128
)

print(article_completion.generate(u'今天天气不错'))
```
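`generate` relies on `random_sample` with `topk=5`. At each step, only the five most probable next tokens are kept and renormalized, and one of them is drawn at random, which is why repeated calls give different continuations. The sketch below is a simplified, self-contained NumPy illustration of that idea, not bert4keras's implementation. `toy_model` is a hypothetical stand-in for `model.predict`. The stopping rule is our simplified reading of the `minlen`/`maxlen` arguments: stop at `end_id` only once at least `minlen` tokens exist, and never exceed `maxlen`.

```python
import numpy as np


def top_k_sample(probas, k, rng):
    """Draw one token id from the k most probable entries of `probas`."""
    top_ids = np.argpartition(-probas, k - 1)[:k]    # ids of the k largest probabilities
    top_p = probas[top_ids] / probas[top_ids].sum()  # renormalize over those k
    return int(rng.choice(top_ids, p=top_p))


def random_sample_sketch(next_token_probas, prompt_ids, end_id,
                         topk=5, minlen=0, maxlen=256, seed=0):
    """Simplified top-k sampling loop (illustrative, not bert4keras's code)."""
    rng = np.random.default_rng(seed)
    output_ids = []
    for _ in range(maxlen):
        # Condition on prompt + generated ids, as predict() does above
        probas = next_token_probas(list(prompt_ids) + output_ids)
        token = top_k_sample(probas, topk, rng)
        output_ids.append(token)
        # Stop at the end token, but only once the output is long enough
        if token == end_id and len(output_ids) >= minlen:
            break
    return output_ids


# Hypothetical stand-in for model.predict: ignores its input and always
# favours ids 2, 5 and 7 (7 plays the role of the end token).
VOCAB_SIZE, END_ID = 10, 7


def toy_model(ids):
    p = np.full(VOCAB_SIZE, 0.01)
    p[[2, 5, END_ID]] = [0.4, 0.3, 0.27]
    return p / p.sum()


out = random_sample_sketch(toy_model, [1, 2], END_ID, topk=3, minlen=4, maxlen=20)
print(out)
```

Keeping only the top k candidates cuts off the long tail of unlikely tokens while still leaving room for variety. Greedy decoding (always taking the single most probable token) would instead return the same continuation every time.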

Partial results from the test script:

>>> article_completion.generate(u'今天天气不错')

[u'今天天气不错,可以去跑步。昨晚看了一个关于跑步的纪录片,里面的女主讲述的是一个女孩子的成长,很励志,也很美丽。我也想跑,但是我不知道跑步要穿运动鞋,所以就买了一双运动鞋。这个纪录片是关于运动鞋的,有一集讲了一个女孩子,从小学开始就没有穿过运动鞋,到了高中才开始尝试跑步。']

(Translation: The weather is quite good today, good for a run. Last night I watched a documentary about running. The female lead talked about a girl's growth, which was very inspiring and beautiful. I also want to run, but I didn't know you needed sneakers to run, so I bought a pair. This documentary is about sneakers. One episode talked about a girl who hadn't worn sneakers since elementary school and only started trying to run in high school.)

>>> article_completion.generate(u'双十一')

[u'双十一马上就要到了!你还在为双11的物流配送而担心吗?你还在为没时间去仓库取货而发愁吗?你还在为不知道怎么买到便宜货而发愁吗?你还在为买不到心仪的产品而懊恼吗?那么,双十一就来了!今天小编带你来看看这些快递,都是怎么送货的!1. 物流配送快递公司的配送,主要是由快递公司负责,快递公司负责派件,物流服务。']

(Translation: Double Eleven is almost here! Are you still worried about the logistics delivery for Double 11? Are you still worried about not having time to pick up goods from the warehouse? Are you still worried about not knowing how to buy cheap goods? Are you still annoyed that you can't buy your favorite products? Well, Double Eleven is coming! Today, the editor will take you to see how these deliveries are made! 1. Logistics delivery. The delivery of express companies is mainly handled by the express company, which is responsible for dispatching items and logistics services.)

>>> article_completion.generate(u'科学空间')

[u'科学空间站科学空间站(英文:science space station),是中华人民共和国的一个空间站。该空间站是中国科学院大连物理研究所研制,主要研发和使用中国科学院大连物理研究所的核能动力空间站。科学空间站位于北京市海淀区,距离地面393米,总建筑面积约为1万平方米,总投资约为5亿元人民币。科学空间站于2018年12月26日开始动工,2021年6月建成并投入使用。']

(Translation: Scientific Space Station (English: science space station) is a space station of the People's Republic of China. This space station was developed by the Dalian Institute of Physics, Chinese Academy of Sciences (CAS), and mainly develops and uses the nuclear-powered space station of the Dalian Institute of Physics, CAS. The Scientific Space Station is located in Haidian District, Beijing, 393 meters from the ground, with a total construction area of about 10,000 square meters and a total investment of about 500 million RMB. Construction of the Scientific Space Station began on December 26, 2018, and it was completed and put into use in June 2021.)

Pretty good, isn't it?

Seeing this effect, many readers' first thought is probably: how can I use it for my own model? Can I fine-tune it on my own tasks?

Unfortunately, the answer is somewhat discouraging. I tried fine-tuning this nearly 1.5-billion-parameter GPT2 with the Adam optimizer on a TITAN RTX with 22 GB of VRAM, and it could not run even with batch_size=1. In the end, I found that it could only be fine-tuned with the AdaFactor optimizer.
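A back-of-the-envelope count shows why Adam runs out of memory. The sketch rests on three assumptions: plain float32 training (4 bytes per number), a parameter count of exactly 1.5 billion, and no accounting for activations or framework overhead. Adam keeps two extra full-size tensors per parameter (the first and second moments). Adafactor, run without momentum (beta1 = 0), drops the first moment and, for each weight matrix, stores only row and column statistics of the second moment. The 1536 × 6144 matrix shape is a hypothetical example.

```python
BYTES_PER_FLOAT32 = 4
GB = 1e9  # decimal gigabytes


def training_memory_gb(n_params, optimizer_copies):
    """Weights + gradients + full-size optimizer tensors, all float32.

    Activations and framework overhead are ignored.
    """
    copies = 2 + optimizer_copies  # 1 for weights, 1 for gradients
    return n_params * copies * BYTES_PER_FLOAT32 / GB


def adam_state_floats(rows, cols):
    """Adam: first and second moment, each the full size of the matrix."""
    return 2 * rows * cols


def adafactor_state_floats(rows, cols):
    """Adafactor with beta1 = 0: one row vector and one column vector."""
    return rows + cols


n_params = 1.5e9
print(f"Adam:      {training_memory_gb(n_params, 2):.1f} GB")  # 24.0 GB
print(f"Adafactor: {training_memory_gb(n_params, 0):.1f} GB plus small factored state")  # 12.0 GB

rows, cols = 1536, 6144  # hypothetical weight-matrix shape
print(adam_state_floats(rows, cols), adafactor_state_floats(rows, cols))  # 18874368 7680
```

Under these assumptions, weights, gradients, and Adam state together already come to about 24 GB before a single activation is stored, which is more than the 22 GB card. Adafactor's optimizer state, by contrast, is tiny next to the weights.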

I will discuss AdaFactor in a separate article later.

Reproduction must include the original address of this article: https://kexue.fm/archives/7292