By 苏剑林 | October 09, 2021

In the article "You Might Not Need BERT-flow: A Linear Transformation Comparable to BERT-flow", inspired by BERT-flow, I proposed an alternative solution called BERT-whitening. It is simpler than BERT-flow and achieves comparable or even better results on most datasets. Furthermore, it can be used for dimensionality reduction of sentence vectors to improve retrieval speed. Later, together with several collaborators, I ran additional experiments for BERT-whitening and wrote it up as an English paper, "Whitening Sentence Representations for Better Semantics and Faster Retrieval", which was posted on ArXiv on March 29 this year.

However, about a week later, a paper titled "WhiteningBERT: An Easy Unsupervised Sentence Embedding Approach" (hereafter referred to as WhiteningBERT) appeared on ArXiv. Its content overlaps heavily with BERT-whitening. Some readers noticed this and wrote to me, suggesting that WhiteningBERT might have plagiarized BERT-whitening. In this post, I report to concerned readers the results of my communication with the authors of WhiteningBERT.

Timeline

First, let's review the relevant timeline for BERT-whitening to help everyone understand the sequence of events:

January 11, 2021: The article "You Might Not Need BERT-flow: A Linear Transformation Comparable to BERT-flow" was published on this blog, proposing BERT-whitening for the first time. At this stage, the article did not yet include the dimensionality reduction content.

January 19, 2021: The BERT-whitening blog post was reposted on the WeChat official account "夕小瑶的卖萌屋". Once it had appeared on both the blog and the official account, I assumed BERT-whitening had spread quite widely, at least within the Chinese NLP community.

January 20, 2021: Liu, a researcher from Tencent, pointed out to me that BERT-whitening is essentially PCA, so it could also be used for dimensionality reduction. In my tests, the reduced-dimension sentence vectors even showed improvements on some tasks. Since the method was both fast and effective, I added this content to the blog post.

January 23, 2021: Sensing that BERT-whitening had academic value, I invited Liu and Cao to help run additional experiments and prepare an English paper for submission to ACL 2021. At that time, there was only about a week left before the submission deadline.

February 02, 2021: Fortunately, we finished the experiments and the paper, and submitted it before the ACL 2021 deadline.

March 26, 2021: The review results for ACL 2021 came out. We didn't feel optimistic, so we skipped the rebuttal and planned to put the paper directly on ArXiv.

March 29, 2021: The English paper for BERT-whitening, "Whitening Sentence Representations for Better Semantics and Faster Retrieval", was posted on ArXiv.

April 05, 2021: The paper "WhiteningBERT: An Easy Unsupervised Sentence Embedding Approach" appeared on ArXiv.

September 26, 2021: The EMNLP 2021 Accepted Papers list was announced, confirming that WhiteningBERT was accepted for EMNLP 2021.

Readers might wonder why I am only bringing this up now, six months after April 5. First, because the BERT-whitening method is relatively simple, I did not rule out the possibility that others had independently arrived at the same results. Therefore, when WhiteningBERT first appeared on ArXiv, I didn't pay it much mind. Second, even in the worst-case scenario where WhiteningBERT copied BERT-whitening, it was initially just an ArXiv post, which isn't a huge deal. There was no need to waste time on it.

However, once I learned that WhiteningBERT had been accepted to EMNLP 2021, the nature of the matter changed from minor to significant. I decided to try communicating with the authors of WhiteningBERT, hoping they could demonstrate the originality of their work and so avoid unnecessary misunderstandings. Below is a record of our communication.

Email Communication

On September 26, I sent the first email to the authors of WhiteningBERT:

Dear Authors,

First, congratulations on your work "WhiteningBERT: An Easy Unsupervised Sentence Embedding Approach" being accepted to EMNLP 2021.

However, I noticed that your work is almost identical in methodology to my blog post published on January 11, 2021 (https://kexue.fm/archives/8069). Even the final naming of the method is almost identical. Therefore, I have reason to question the originality of your work's methodology.

As such, I believe it is necessary for you to provide evidence that your work was indeed independent and original (e.g., manuscript edit records proving the work started before January 11). If not, I request that you withdraw the paper from EMNLP and issue a public apology. If there is no response to these two points, I will have no choice but to initiate a public discussion online.

Looking forward to your reply.

At the time, I was quite emotional after hearing the news, so the wording was not very friendly. Please excuse me. Shortly thereafter on the same day, the first author of WhiteningBERT replied to me:

Hello,

We have received your letter. Thank you for your interest in our work!

First, we believe that our work (submitted to ArXiv on April 5, 2021) and your "Whitening Sentence Representations for Better Semantics and Faster Retrieval" (submitted to ArXiv on March 29, 2021) are contemporaneous works. While the two papers have similarities, the points they make and the stories they tell are not nearly identical. This was mentioned and cited in our paper.

Second, regarding research on unsupervised sentence representation, we have been working on it since last year. We hoped to obtain unsupervised sentence representations based on existing pre-trained models and explored several methods including inter-layer combinations, data augmentation, introducing graph structures, linear transformations, pre-training, and knowledge transfer. We experimented on several sentence semantic similarity tasks; some methods that didn't work were left out, and finally, we summarized three simple and useful conclusions into this experimental paper. As for the final name WhiteningBERT, we chose it because one of our methods uses PCA Whitening. While the name might seem like clickbait, we settled on it during the writing process for ease of reference. (We originally called it MatchingBERT; the image below shows that the last modification time of some of these files was in July 2020.)

Third, regarding the originality of the method, we never claimed that the PCA Whitening algorithm was our invention. In fact, the three methods in our conclusions are all very simple, and many papers and tutorials have introduced whitening methods. Therefore, we admit that the novelty is limited.

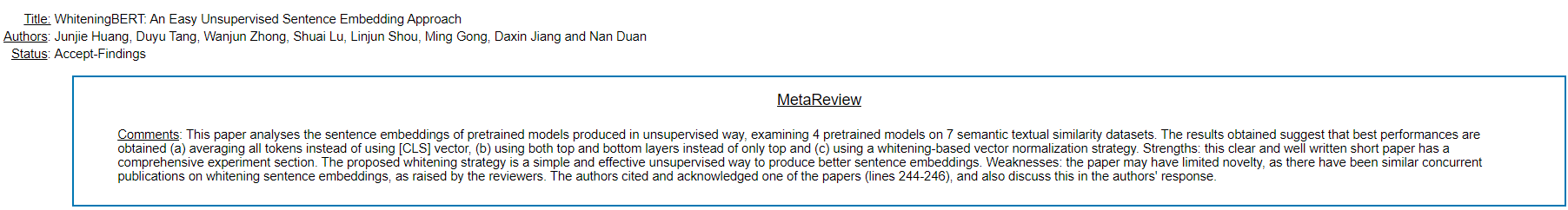

Finally, regarding the content similarity you mentioned (including your blog) and the lack of innovation, these were already raised and discussed by reviewers during the EMNLP 2021 review process. The PCs and finally the SPCs were aware of the whole situation. Nevertheless, they still decided to accept it; I believe the PCs felt our work still provides value.

Best regards,

The reply included two screenshots:

Snapshot 1: MatchingBERT project timestamp

Snapshot 2: Meta review snapshot

At this point, I was quite appreciative that the first author was willing to communicate actively on this issue. However, their reply did not resolve my doubts, so I replied on the same day:

Hello,

Thank you for your reply. However, what I am questioning is not your innovation, but your originality.

1. I am aware that Microsoft has many people dedicated to research in various NLP tasks, but this doesn't invalidate my concerns.

2. Snapshot 1 only serves as very weak evidence that you were working on something called "MatchingBERT" early on, but I cannot confirm the actual content of that work.

3. Snapshot 2 similarly does not negate my concerns.

Regarding "the PCs and SPCs were aware of the whole situation," do you mean that knowing "a Chinese blog introduced the same method more than two months before WhiteningBERT was submitted to ArXiv, and an English paper introduced the same method a week before it was submitted," the PCs and SPCs still did not question your originality and chose to accept it?

These exchanges occurred on September 26. From then until October 5, I received no response from any of the WhiteningBERT authors. So, I emailed all the authors again:

Dear Authors, I apologize for interrupting your National Day holiday.

After I raised my concerns, the first author replied promptly, and I replied immediately as well (logs attached). But since my last reply, I haven't received any follow-up from any author. In the spirit of science, I don't want to cause any misunderstandings and hope to further clarify the situation. I am making this inquiry to confirm if you have decided not to respond further.

The first author replied quickly:

Hello,

We have currently asked our company's legal department to conduct an evaluation, and the legal department will provide a response. Since it is currently the National Day holiday, we hope you will understand!

Best regards,

Personal Views

To be honest, receiving this email from the first author left me with mixed feelings: shocked, confused, and somewhat speechless. Initially, I wasn't certain of the nature of the situation, so I chose to inquire via email first to avoid later misunderstandings and embarrassment. If the authors could show that WhiteningBERT was proposed independently, that would be a happy ending for everyone, providing an explanation to the readers and myself. Instead, the authors avoided a direct response to the issue and turned to consult a legal department. What kind of move is that?

As I mentioned in the timeline, when we decided to organize BERT-whitening into a paper for ACL 2021, we had less than two weeks before the deadline. In that time, we finished the experiments and wrote the paper (although my English isn't great). If WhiteningBERT was truly proposed earlier than BERT-whitening, then with such a strong lineup of authors, surely they could have completed the experiments and paper much sooner? Or at the very least, after the BERT-whitening blog was released, they could have posted their paper to ArXiv to demonstrate their originality. How could it be such a coincidence that they waited until the BERT-whitening English paper hit ArXiv to release their own?

Of course, even with these doubts, we still cannot definitively characterize this event. The reason is simple: BERT-whitening is so simple that the possibility of independent discovery cannot be ruled out. This is exactly why I initiated the email communication. So, we return to the authors' puzzling move: what was the thinking behind involving the legal department?

In fact, this whole affair is difficult to "prove" as plagiarism with substantial evidence. Even if the authors of WhiteningBERT did not respond at all, they would face no legal risk. My hope that they would provide some form of proof was purely a moral appeal, not an attempt to "bring anyone to justice." This is a scientific problem, not a PR problem. Therefore, even if the legal department can eliminate the legal risks for the authors, if they remain unwilling to provide substantive proof, how can they eliminate the doubts in the minds of the readers and myself?