By 苏剑林 | June 03, 2019

Background: A few months ago, Baidu held the "2019 Language and Intelligence Technology Competition," which consisted of three tracks. I was quite interested in the "Information Extraction" track, so I signed up. After two months of intense work, the competition has finally concluded, and the final results have been announced. Starting from a total lack of knowledge about Information Extraction, I explored some experiences in supervised learning for information extraction through study and research during this competition, which I would like to share here.

I ranked 7th on the final test set, with an F1 score of 0.8807 (Precision 0.8939, Recall 0.8679), about 0.01 behind the first place. From a competition perspective, this result is not exceptionally outstanding, but I believe the model has several innovative features, such as a self-designed extraction structure, CNN+Attention (making it fast enough), and the absence of pre-trained models like BERT. I believe this has certain reference value for both academic research and engineering applications of information extraction.

Information Extraction (IE) is a text processing technology that extracts factual information such as entities, attributes, relations, and events from natural language text. It is an important foundation for AI applications such as information retrieval, intelligent question answering, and intelligent dialogue, and has long received extensive attention from the industry. ... This competition will provide the industry's largest schema-based Chinese information extraction dataset (SKE), aiming to provide an academic exchange platform for researchers, further enhance the research level of Chinese information extraction technology, and promote the development of related AI applications.

—— From the official competition website

The information extraction task this time is, more accurately, a "triplet" extraction task. Sample data is as follows:

{

"text": "Jiuxuanzhu is a novel serialized on the Zongheng Chinese website, authored by Longma",

"spo_list": [

["Jiuxuanzhu", "Serialization Website", "Zongheng Chinese Website"],

["Jiuxuanzhu", "Author", "Longma"]

]

}

The goal is to input a sentence and output all triplets contained in that sentence. A triplet is in the form of $(s, p, o)$, where $s$ is the subject (main entity), a fragment of the query; $o$ is the object (target entity), also a fragment of the query; and $p$ is the predicate, the relationship between the two entities. The competition pre-defined all candidate predicates (schema, a total of 50 candidates). In general, $(s, p, o)$ can be understood as "$s$'s $p$ is $o$".

Baidu provided nearly 200,000 labeled data points with high annotation quality. (Please do not ask me for data; I am not responsible for sharing the dataset. It is said the dataset will be publicly released later at http://ai.baidu.com/broad/download.)

Clearly, this is a "one-to-many" extraction + classification task. Through manual observation of the samples, the characteristics are as follows:

1. $s$ and $o$ are not necessarily words identified by word segmentation tools. Therefore, the query must be labeled at the character level to extract the correct $s$ and $o$. Considering that word segmentation might cut boundaries incorrectly, character-based input should be used for labeling;

2. In most samples, the extraction result is in the form of "one $s$, multiple $(p, o)$". For example, "The stars of 'Wolf Warrior' include Wu Jing and Yu Nan," then extract "(Wolf Warrior, Starring, Wu Jing)" and "(Wolf Warrior, Starring, Yu Nan)";

3. Samples with "multiple $s$, one $(p, o)$" or even "multiple $s$, multiple $(p, o)$" also account for a certain proportion. For example, "The stars of 'Wolf Warrior' and 'Wolf Warrior 2' are both Wu Jing," then extract "(Wolf Warrior, Starring, Wu Jing)" and "(Wolf Warrior 2, Starring, Wu Jing)";

4. The same pair $(s, o)$ might correspond to multiple $p$. For example, "Wu Jing is both the star and director of 'Wolf Warrior'," then extract "(Wolf Warrior, Starring, Wu Jing)" and "(Wolf Warrior, Director, Wu Jing)";

5. In extreme cases, $s$ and $o$ might overlap. For example, "'Autobiography of Lu Xun' was published by Jiangsu Literature and Art Publishing House." Strictly speaking, besides extracting "(Autobiography of Lu Xun, Publisher, Jiangsu Literature and Art Publishing House)", one should also extract "(Autobiography of Lu Xun, Author, Lu Xun)".

In the "Sample Characteristics" section, we listed 5 basic observations. Except for the 5th point, which is somewhat extreme, the other 4 points are common characteristics of information extraction tasks. Before starting, I briefly surveyed current mainstream information extraction models and found that no single model could handle all these 5 characteristics well. Therefore, I abandoned existing extraction approaches and designed a new extraction scheme based on probabilistic graph ideas, utilizing CNN+Attention for efficiency.

For example, a baseline approach is to first perform entity recognition and then classify relations between the recognized entities. However, this approach does not handle cases where the same $(s, o)$ corresponds to multiple $p$ well, and suffers from sampling efficiency issues. Another approach is to treat it as a holistic sequence labeling task, referring to the paper "Joint Extraction of Entities and Relations Based on a Novel Tagging Scheme". However, this design doesn't handle multiple $s$ and multiple $o$ effectively, requiring an ugly "proximity principle." There are also Reinforcement Learning methods... but without exception, none of these methods solve the problem of overlapping $s$ and $o$.

It seems incredible to me that these basic problems in Information Extraction haven't been resolved after so many years. My principle is: discard inelegant designs. So, I decided to abandon all extraction ideas I knew and design a new scheme. For this, I considered a probabilistic graph approach similar to Seq2Seq.

Anyone who has worked with Seq2Seq knows that the decoder actually models: \begin{equation}P(y_1,y_2,\dots,y_n|x)=P(y_1|x)P(y_2|x,y_1)\dots P(y_n|x,y_1,y_2,\dots,y_{n-1})\end{equation} During prediction, it first predicts the first word via $x$, then predicts the second word assuming the first is known, and so on, until an end token appears. Why not apply this logic to triplet extraction? We consider: \begin{equation}P(s, p, o) = P(s) P(o|s)P(p|s,o)\end{equation} That is, we can first predict $s$, then pass $s$ in to predict the corresponding $o$ for that $s$, and then pass $s$ and $o$ in to predict relation $p$. In practice, the prediction of $o$ and $p$ can be combined into one step. So the total process takes only two steps: first predict $s$, then pass $s$ in to predict all corresponding $o$ and $p$.

Theoretically, the above model only extracts a single triplet. To handle cases with multiple $s$, multiple $o$, or even multiple $p$, we use a "semi-pointer-semi-annotation" structure (essentially replacing Softmax with Sigmoid, also introduced in "DGCNN: A CNN-based Reading Comprehension QA Model"). We also use Sigmoid instead of Softmax for relation classification.

With this design, the final model can decode very simply and efficiently, completely covering the 5 characteristics listed in "Sample Characteristics."

Note 1:

Why not predict $o$ first and then $s$ and its corresponding $p$?

This is because in the second step, we need to sample and pass the result of the first step (and only sample one). As previously analyzed, in most samples, the number of $o$ is greater than the number of $s$. If we predict $s$ first, sampling $s$ for the second step is more likely to be sufficient (because $s$ is scarce). Conversely, sampling $o$ would be harder to cover fully (because $o$ can be numerous). Readers will realize this more clearly as they continue reading.

Note 2:

Looking at recent arXiv papers, I found that conceptually, this extraction design is similar to the article:

"Entity-Relation Extraction as Multi-Turn Question Answering".

Now that we've explained the extraction logic—recognizing $s$ first, then passing $s$ to recognize $p$ and $o$ simultaneously—let's introduce the overall model structure.

To ensure efficiency, the model uses a CNN+Attention structure (plus a short-sequence LSTM; since the sequence is short, it doesn't hurt efficiency). It avoids slow pre-trained models like BERT. The CNN follows the previously introduced DGCNN, and the Attention used is the Self Attention promoted by Google. The overall structure is shown in the figure below.

Specifically, the processing flow of the model is:

1. Input character ID sequence, get corresponding character vectors via character-word mixed Embedding (detailed later), and add Position Embedding;

2. Input the "character-word-position Embedding" into 12 layers of DGCNN for encoding, obtaining an encoded sequence (denoted as $\boldsymbol{H}$);

3. Pass $\boldsymbol{H}$ through one layer of Self-Attention, then concatenate the output with prior features (optional, detailed later);

4. Pass the concatenated result through CNN and Dense layers, using a "semi-pointer-semi-annotation" structure to predict the start and end positions of $s$;

5. During training, randomly sample one labeled $s$ (during prediction, iterate through all predicted $s$). Pass the sub-sequence of $\boldsymbol{H}$ corresponding to this $s$ into a BiLSTM to get an encoding vector for $s$. Then add relative Position Embeddings to get a vector sequence of the same length as the input sequence;

6. Pass $\boldsymbol{H}$ through another layer of Self-Attention, then concatenate the result with the output of step 5 and prior features;

7. Pass the concatenated result through CNN and Dense layers. For each predicate $p$, construct a "semi-pointer-semi-annotation" structure to predict the start and end positions of the corresponding $o$. This predicts $o$ and $p$ simultaneously.

This model differs significantly from an early baseline model I open-sourced (https://github.com/bojone/kg-2019-baseline).

Additionally, regarding two possible doubts: first, "Why only sample one $s$ in step 5?" The answer is simple: one is enough (sampling multiple is equivalent to increasing batch size), and sampling one is easier to implement. Second, "Why not use BERT?" This question is actually quite boring; I simply didn't feel like using it. I have never been very fond of BERT and hadn't explored its fine-tuning until recently. Fine-tuning BERT is less interesting, less efficient, and doesn't reflect individual value as much. (I did try a BERT approach a few days before the deadline, which I might share in a later article.)

Having introduced the design thinking and overall structure, let's look at implementation details.

As mentioned, to minimize boundary errors, we use character-level labeling. However, pure character embeddings struggle to capture semantic information. A more effective way to incorporate semantics is "word-character mixed Embedding."

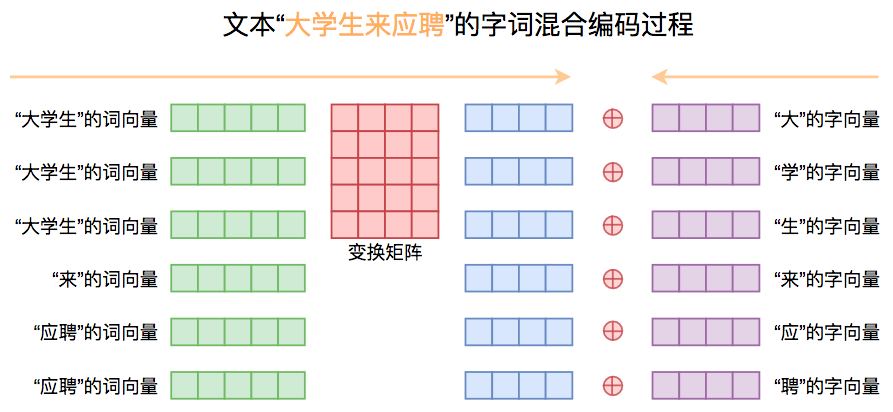

In this model, I used a self-designed word-character mixing method. First, the text sequence is input at the character level, and a character Embedding layer produces character vectors. Then, the text is segmented, and word vectors are extracted using a pre-trained Word2Vec model. To align word vectors with the character sequence, we repeat the word vector for each character in that word (number of repetitions = number of characters in the word). After obtaining the aligned word vector sequence, we transform the word vectors to the same dimension as the character vectors via a matrix and add them together. The process is illustrated below:

In practice, I used pyhanlp for segmentation and trained a Word2Vec model (Skip-Gram + Negative Sampling) on 10 million Baidu Baike entries. Character vectors were randomly initialized. During training, the Word2Vec vectors remained fixed, and only the transformation matrix and character vectors were optimized. From another perspective, this fine-tunes the Word2Vec vectors using character vectors and the transformation matrix. This way, we integrate prior semantic information from pre-trained word vectors while retaining character-level flexibility.

In my observation, this mixed approach improves the final result by about 1% to 2% compared to pure character vectors. This improvement is consistent across other tasks I've experimented with. Different pre-trained word vector models have some impact, but it's usually less than 0.5%. I also tried Tencent AI Lab word vectors (using the first 1 million words), with similar results.

Since the model mainly uses CNN+Attention, the encoded vector sequence lacks a strong "sense of position." For this competition's data, position information is valuable (e.g., $s$ often appears at the beginning, $o$ is often near $s$). An effective way to add position information is Position Embedding. Unlike formula-calculated Position Embeddings, this model uses optimizable Position Embeddings.

Specifically, I set a maximum length of 512, initialized a new all-zero Embedding layer (same dimension as character vectors). Position IDs are passed in to output Position Embeddings, which are added to the mixed word-character embeddings before being passed to the DGCNN.

Position Embedding is also used when encoding $s$. The sampled $s$ is encoded by BiLSTM into a fixed-size vector, which is then concatenated with the original encoded sequence as a condition for predicting $o$ and $p$. However, since $o$ is more likely to be near $s$, I didn't simply copy the vector; I added a "relative position vector" indicating the current position relative to $s$ (refer to the source code for details). This relative position vector shares the same Embedding layer as the input position embedding.

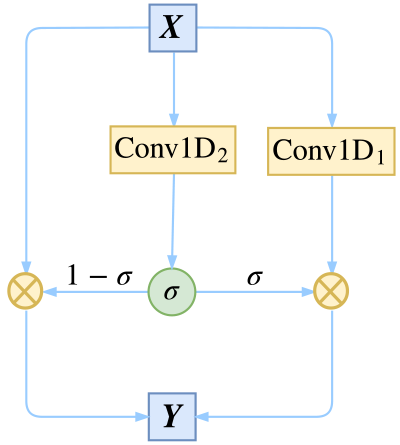

DGCNN was introduced in "DGCNN: A CNN-based Reading Comprehension QA Model". It is essentially "Dilated Gated Convolution." The concept of Gated Convolution comes from "Convolutional Sequence to Sequence Learning", where it's called GLU (Gated Linear Units). I replaced standard convolutions with dilated convolutions to increase the receptive field. Similar approaches appear in "Fast Reading Comprehension with ConvNets".

When input and output dimensions are the same, DGCNN can include residuals. I previously proved that DGCNN with residuals is mathematically equivalent to a dilated convolution in Highway form: \begin{equation}\begin{aligned}\boldsymbol{Y}=&\boldsymbol{X}\otimes \Big(1-\boldsymbol{\sigma}\Big) + \text{Conv1D}_1(\boldsymbol{X}) \otimes \boldsymbol{\sigma}\\ \boldsymbol{\sigma} =& \sigma\Big(\text{Conv1D}_2(\boldsymbol{X})\Big) \end{aligned}\end{equation} This model uses this form of DGCNN, representing selective multi-channel information transmission.

The final model used 12 layers of DGCNN, with dilation rates sequence $[1, 2, 5, 1, 2, 5, 1, 2, 5, 1, 1, 1]$. That is, $[1, 2, 5]$ is repeated three times (repeatedly learning from fine to coarse granularity), followed by three layers of $[1, 1, 1]$ (fine granularity fine-tuning).

External triplet knowledge bases were not allowed in the competition. However, we can integrate all triplets from the training set into a knowledge base. For a new sentence, we can perform distant supervision searches in this knowledge base to get candidate triplets. If two entities in a sentence happen to be the $s$ and $o$ of a triplet in the knowledge base, we extract that triplet as a candidate. This way, any sentence's candidate triplets can be extracted via pure retrieval. Note that these are only candidate triplets and could all be incorrect.

I used these distant supervision results as features. All $s$ obtained via distant supervision are formed into a 0/1 vector (similar to the labeling structure) and concatenated to the encoding sequence before predicting $s$. Similarly, all $o$ and corresponding $p$ from distant supervision are formed into a 0/1 vector and concatenated before predicting $o$ and $p$. In training, when constructing distant supervision features, the triplets of the current sample must be excluded (simulating test set conditions).

In terms of effect, adding distant supervision features improved the offline validation F1 by over 2%! This was checked carefully for "data leakage." However, surprisingly, there was almost no improvement on the online test set. Since the results with and without these features differed significantly, I eventually fused the results of both types of models.

The implementation includes additional auxiliary modules (represented by variables `pn1`, `pn2`, `pc`, `po` in the code). Theoretically, these provide "global information." `pn1` and `pn2` can be seen as global entity recognition modules, `pc` as a global relation detection module, and `po` as a global relation existence judgment. These modules are not trained separately but multiplied into the $s$ and $o$ prediction results.

These modules don't significantly affect the final F1 but help speed up training and feel more "reasonable" intuitively. Furthermore, when encoding $s$ after random sampling, $s$ is encoded via BiLSTM. In implementation, I uniformly interpolated the start and end IDs of $s$ to extract a fixed number (6) of vectors for the BiLSTM, avoiding the issue of variable-length $s$.

Finally, some details about the training process. Model code: https://github.com/bojone/kg-2019.

Test environment: Python 2.7 + Keras 2.2.4 + Tensorflow 1.8.

If this model helps your work, please cite it (not that I expect much):

@misc{

jianlin2019bdkgf,

title={A Hierarchical Relation Extraction Model with Pointer-Tagging Hybrid Structure},

author={Jianlin Su},

year={2019},

publisher={GitHub},

howpublished={\url{https://github.com/bojone/kg-2019}},

}

For the loss function, since the "semi-pointer-semi-annotation" consists of binary classifications, I used binary cross-entropy. Note that $s$ prediction has two binary classifications, while $o$ prediction (predicting $p$ as well) has $100 = 50 \times 2$ binary classifications. Their losses are still added in a 1:1 ratio. In other words, the absolute value of $o$'s loss is 50 times $s$'s loss. This might seem counter-intuitive (one might think $s$'s weight should be larger since if $s$ is wrong, the triple is wrong), but experiments showed $s$ and $o$ are equally important. Variants like Focal Loss did not help.

The model used the Adam optimizer. I first trained with a learning rate of $10^{-3}$ for up to 50 epochs, loaded the best result, and continued with $10^{-4}$ until convergence. The first epoch was used for WarmUp; otherwise, it might not converge.

To ensure stable improvement, EMA (Exponential Moving Average) was used with a decay rate of 0.9999.

The decoding process has significant room for tuning. The "semi-pointer-semi-annotation" structure uses two Sigmoid-activated outputs for the start and end of entities. A threshold is needed to identify an entity. Generally, 0.5 is used. However, I found that setting the "start" threshold to 0.5 and the "end" threshold to 0.4 yielded the best F1.

Furthermore, the test set for this competition was very high quality (very clean and standardized results). In contrast, the training set had more omissions. Thus, post-processing rules were used to standardize predictions according to official specifications. In the final days, many teams improved significantly, likely by finding rules to fix predictions. This added nearly 1% F1 for me, though it's more of a competition trick than academic research.

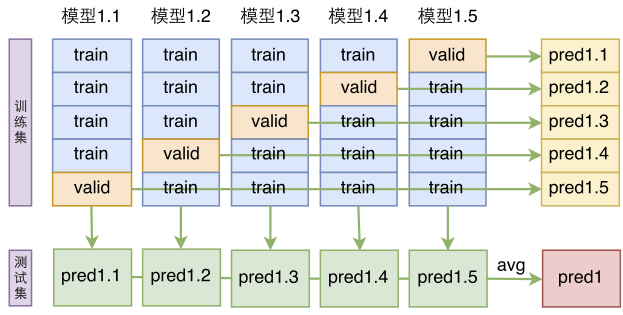

Ensembling is a powerful method. For this task, I used a layered ensemble scheme.

As mentioned, online results with and without distant supervision features were similar, but the prediction files varied. I took the union of the two types of models. Before taking the union, I used model averaging to improve single-model accuracy. Specifically, for models without distant supervision, I performed 8-fold cross-validation (shuffling and splitting data into 8 parts) to get 8 models. I did the same for models with distant supervision.

After getting these 16 models, I averaged the 8 models without distant supervision (averaging the output probabilities before decoding), and did the same for the 8 models with distant supervision. This resulted in two files, each with high precision due to averaging. Finally, I took the union of these two results.

Since the test set was perfect but the training set had omissions, I used a form of knowledge distillation to reorganize the training set. First, I used the 8 models (without distant supervision) obtained from cross-validation on the original training set to predict the training set itself. If a triplet appeared in all 8 predictions but not in the original training labels, it was added. If a triplet was in the training labels but didn't appear in any of the 8 predictions, it was removed.

After this cleaning, the training set was much more consistent. Re-training models on this corrected set yielded nearly 1% online improvement. While this might remove some difficult training samples, it focused the model on the primary "contradictions" (knowledge distillation).

"Efficiency" is a hallmark of this model. Even with the ensemble of 16 models, predicting the entire test set (~100k samples) took only 4 hours (could be reduced to 2 hours with a better CPU). In the competition groups, I heard many teams spent over ten hours or even several days for the final prediction.

Efficiency can be further improved by sacrificing a bit of precision. For example, the 12-layer DGCNN could be reduced to 6 layers with dilation sequence $[1, 2, 5, 1, 2, 5]$. Also, instead of BiLSTM, the vectors for the start and end of $s$ could be concatenated directly. Furthermore, reducing the Word2Vec vocabulary or using pure character embeddings could save more time.

With these changes, speed could increase by over 5 times, with a performance drop of only about 2% to 4%, depending on the trade-offs.

This article records my model design and "alchemy" experience in this competition. Before this, I knew almost nothing about Information Extraction or Knowledge Graphs, so I relied purely on intuition for model design and tuning (partly because mainstream models didn't satisfy me). I am quite satisfied with the final result and ranking. Of course, parts of the model were developed in isolation; if you find anything inappropriate, feel free to criticize. Even after this competition, I am still a novice in IE and KG, and I hope for guidance from seniors in the field.