By 苏剑林 | June 11, 2021

Last year, we released the SimBERT model, which has been one of our most successful open-source models, earning recognition from many readers. Simply put, SimBERT is a model that integrates generation and retrieval. It can serve as a relatively strong baseline for sentence vectors, and it can also automatically generate similar questions as an auxiliary tool for data augmentation, a feature that was quite pioneering.

Recently, using RoFormer as the foundation, we further integrated and optimized SimBERT-related technologies and finally released the upgraded RoFormer-Sim model.

RoFormer-Sim is the upgraded version of SimBERT; we can also colloquially refer to it as "SimBERTv2," while "SimBERT" by default refers to the old version. Externally, aside from the base architecture switching to RoFormer, there is no obvious difference between RoFormer-Sim and SimBERT. In fact, their primary differences lie in the training details, which can be compared using two formulas:

$$ \text{SimBERT} = \text{BERT} + \text{UniLM} + \text{Contrastive Learning} $$

$$ \text{RoFormer-Sim} = \text{RoFormer} + \text{UniLM} + \text{Contrastive Learning} + \text{BART} + \text{Distillation} $$

In addition, RoFormer-Sim utilizes more training data and has been extended to general sentence patterns. This means that unlike SimBERT, which was limited to interrogative sentences (questions), RoFormer-Sim can be used for similar sentence generation for general sentences, broadening its application scenarios. Other training details include the use of larger batch sizes and max lengths in RoFormer-Sim, which we will introduce further below.

Open Source Address: https://github.com/ZhuiyiTechnology/roformer-sim

The key for both SimBERT and RoFormer-Sim lies in the construction of the training corpus. The training corpus for RoFormer-Sim consists of two parts: 1. Interrogative-type similar sentences; 2. General-type similar sentences. For interrogative similar sentences, we followed the SimBERT approach, collecting similar questions from Baidu Zhidao and further cleaning them via rules; this process is very mature for us. For general similar sentences, we did not have a ready-made source, so we proposed two schemes to construct (pseudo) similar sentence pairs in a largely unsupervised manner.

The first scheme is based on the idea that "answers to the same question are similar." If we have existing QA corpora where one question has multiple answers, we can segment each answer into sentences. Then, using an existing similarity function to compare the similarity between answers, we select sentence pairs with similarity exceeding a certain threshold as similar sentence pairs.

The second scheme is based on the idea that "sentences within the same passage are similar." This is even simpler and more direct: we segment each passage into sentences, calculate the similarity between all pairs using an existing similarity function, and keep the pairs that exceed a threshold. Obviously, the rationale for this scheme is weaker, so the threshold used is higher.

The "existing similarity function" mentioned here is simply a variant of Jaccard similarity. In other words, a rule-based, character-level similarity is all we needed; semantic relevance comes from the internal associations within passages and from the generalization capability of the pre-trained model itself. Through the first scheme, we constructed approximately 4.5 million (pseudo) similar sentence pairs from several reading comprehension datasets. Through the second scheme, we constructed approximately 4.7 million (pseudo) similar sentence pairs from over 30GB of parallel corpora. The crawled questions amounted to approximately 30 million similar question groups (one group can form multiple pairs). Questions therefore far outnumber general sentences, so we sampled the two types at a 1:1 ratio to keep the sentence types balanced.
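The two mining schemes above can be sketched roughly as follows. This is only an illustration under stated assumptions: the source says a variant of Jaccard similarity was used but does not give its exact form, so the plain character-level Jaccard here, the regex sentence splitter, the function names, and the threshold values are all hypothetical.

```python
import re
from itertools import combinations


def split_sentences(text):
    """Split text on common sentence-ending punctuation (assumed rule)."""
    parts = re.split(r"[。！？!?\n]+", text)
    return [p.strip() for p in parts if p.strip()]


def char_jaccard(a, b):
    """Plain character-level Jaccard: |A ∩ B| / |A ∪ B| (assumed variant)."""
    set_a, set_b = set(a), set(b)
    if not set_a and not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


def mine_pairs_within(passage, threshold):
    """Scheme two: compare all sentence pairs inside one passage."""
    sentences = split_sentences(passage)
    return [
        (s, t)
        for s, t in combinations(sentences, 2)
        if s != t and char_jaccard(s, t) >= threshold
    ]


def mine_pairs_across(answers, threshold):
    """Scheme one: compare sentences taken from different answers
    to the same question."""
    pairs = []
    for a, b in combinations(answers, 2):
        for s in split_sentences(a):
            for t in split_sentences(b):
                if s != t and char_jaccard(s, t) >= threshold:
                    pairs.append((s, t))
    return pairs


if __name__ == "__main__":
    # Hypothetical thresholds; the source only says scheme two uses a higher one.
    print(mine_pairs_across(["今天天气好。", "明天天气好。我很开心。"], threshold=0.5))
    print(mine_pairs_within("今天天气好。明天天气好！后天呢？", threshold=0.6))
```

Because the similarity is purely character-based, pairs mined this way are only pseudo-similar; as the text notes, the semantic signal has to come from the shared context and from the pre-trained model.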

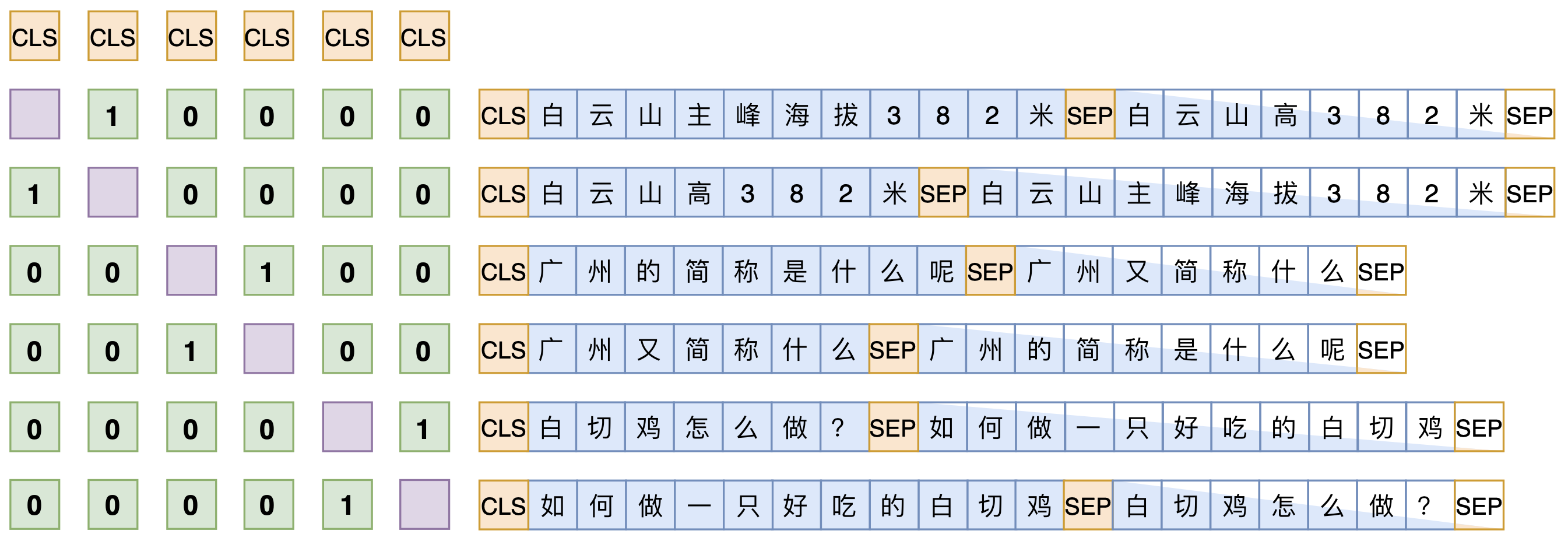

The training method for RoFormer-Sim is basically the same as SimBERT, as shown in the figure below. A slight difference is that to enhance the model's generation capability, during the construction of training data, we also randomly replaced some tokens of the input sentence with [MASK]. This pre-training method was first proposed by BART. Our difference from BART is: BART "inputs a noisy sentence and outputs the original sentence," whereas we "input a noisy sentence and output a similar sentence to the original one." Theoretically, our task is even more difficult.

Schematic diagram of SimBERT training method
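The [MASK] noising described above can be sketched as a small data-construction step. This is a hedged illustration, not the released training code: the masking rate is not given in the text, so `mask_rate=0.15` is an assumed placeholder, and `random_mask` and `build_example` are hypothetical helper names.

```python
import random


def random_mask(tokens, mask_rate=0.15, mask_token="[MASK]", rng=None):
    """Independently replace each token with mask_token with probability
    mask_rate. The rate is an assumption, not a value from the article."""
    rng = rng or random.Random()
    return [mask_token if rng.random() < mask_rate else tok for tok in tokens]


def build_example(source_tokens, similar_tokens, mask_rate=0.15, rng=None):
    """Return (noisy source, clean target).

    Unlike BART, the target is a sentence *similar* to the source,
    not the source itself, and only the source side is noised.
    """
    noisy_source = random_mask(source_tokens, mask_rate, rng=rng)
    return noisy_source, list(similar_tokens)


if __name__ == "__main__":
    rng = random.Random(0)
    src = list("今天天气怎么样")
    tgt = list("今天的天气如何")
    print(build_example(src, tgt, mask_rate=0.3, rng=rng))
```

Keeping the target side clean means the model always learns to emit a fluent similar sentence, while the noised source forces it to infer the missing parts from context.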

There are no particularly good evaluation metrics for generation effects; we can just visually inspect some examples:

Overall, similar augmentation for arbitrary sentence structures has been preliminarily achieved, but the augmentation effect for questions is significantly better than for general sentence types. This is because the quality of the questions in the training corpus is significantly higher than that of general sentence types. Due to the BART-like training, in addition to direct similar sentence generation, we can also manually mask certain parts to let the model diverge and expand on its own, for example:

Please explore more ways to play with this on your own.

Adding general-sentence corpora and introducing BART-like training both improved the generative model to some extent. However, we unexpectedly found that the performance of the retrieval model (i.e., the sentence encoding model) decreased. A likely reason is that, while more corpora and heavier noise make the task harder for the generative model, for contrastive learning these diverse sentence structures and noisy samples act as negative samples that are actually easier to distinguish. For example, if a batch contains both interrogative and declarative sentences, the model can identify many negative samples through sentence structure alone (rather than semantics), which weakens its ability to understand semantics.

Of course, the core positioning of SimBERT and RoFormer-Sim is as similar sentence augmentation models; the retrieval model is just a "by-product." However, we still hope this "by-product" can be as good as possible. To this end, after RoFormer-Sim training was completed, we further transferred the retrieval performance of SimBERT to RoFormer-Sim through distillation, making the retrieval performance of RoFormer-Sim basically equal to or even better than SimBERT. The distillation method is very simple: assuming for the same batch of sentences, the sentence vectors from SimBERT are $u_1, u_2, \cdots, u_n$, and the sentence vectors from RoFormer-Sim are $v_1, v_2, \cdots, v_n$, we then learn using the loss:

\begin{equation} L_{sim} = \frac{\lambda}{n^2} \sum_{i=1}^n \sum_{j=1}^n (\cos(u_i, u_j) - \cos(v_i, v_j))^2 \end{equation}

Here $\lambda=100$. Of course, to prevent the model from "forgetting" how to generate, the generation loss must be kept during distillation, i.e., $L = L_{sim} + L_{gen}$. Distillation for the base version does not require many steps; it can be completed in roughly 5,000 steps.
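As a concrete reading of $L_{sim}$, here is a minimal NumPy sketch that computes the loss for one batch. It only evaluates the formula; the actual training would use a differentiable framework and add $L_{gen}$. The function names are hypothetical, and the toy vectors are made up for illustration.

```python
import numpy as np


def cosine_matrix(x):
    """Pairwise cosine similarities between the rows of x, shape (n, n)."""
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    return x @ x.T


def similarity_distill_loss(u, v, lam=100.0):
    """L_sim = lam / n^2 * sum_ij (cos(u_i, u_j) - cos(v_i, v_j))^2.

    u: teacher sentence vectors, shape (n, d_teacher)
    v: student sentence vectors, shape (n, d_student)
    The two dimensions may differ, since only the n x n similarity
    matrices are compared.
    """
    n = u.shape[0]
    diff = cosine_matrix(u) - cosine_matrix(v)
    return lam / n**2 * np.sum(diff**2)


if __name__ == "__main__":
    u = np.array([[1.0, 0.0], [0.0, 1.0]])  # teacher: orthogonal pair
    v = np.array([[1.0, 0.0], [1.0, 0.0]])  # student: identical pair
    print(similarity_distill_loss(u, v))
```

Matching similarity matrices rather than the vectors themselves is what allows teacher and student to have different hidden sizes, which is convenient when distilling into a smaller model.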

As in "Which Unsupervised Semantic Similarity is Strongest? We Did a Comprehensive Evaluation," we used the same tasks to compare the retrieval performance of SimBERT (V1) and RoFormer-Sim (V2). The P1 to P4 suffixes follow the configurations of that evaluation, and the three numbers in each cell are the results for "no whitening," "with whitening," and "with whitening-256," as in the previous evaluation:

| | ATEC | BQ | LCQMC | PAWSX | STS-B |
|---|---|---|---|---|---|
| V1-P1 | 38.50/23.64/30.79 | 48.54/31.78/40.01 | 76.23/75.05/74.50 | 15.10/18.49/15.64 | 74.14/73.37/75.29 |
| V1-P2 | 38.93/27.06/30.79 | 49.93/35.38/40.14 | 75.56/73.45/74.39 | 14.52/18.51/15.74 | 73.18/73.43/75.12 |
| V1-P3 | 36.50/31.32/31.24 | 45.78/29.17/40.98 | 74.42/73.79/73.43 | 15.33/18.39/15.87 | 67.31/70.70/72.00 |
| V1-P4 | 33.53/29.04/28.78 | 45.28/34.70/39.00 | 73.20/71.22/72.09 | 14.16/17.32/14.39 | 66.98/70.55/71.43 |
| V2-P1 | 39.52/25.31/31.10 | 50.26/33.47/40.16 | 76.02/74.92/74.58 | 14.37/19.31/14.81 | 74.46/71.00/76.29 |
| V2-P2 | 39.71/32.60/30.89 | 50.80/37.62/40.12 | 75.83/73.45/74.52 | 13.87/19.50/14.88 | 73.47/74.56/76.40 |
| V2-P3 | 39.55/24.61/31.82 | 50.25/29.59/41.43 | 74.90/73.95/74.06 | 14.57/18.85/15.26 | 68.89/71.40/73.36 |
| V2-P4 | 36.02/29.71/29.61 | 48.22/35.02/39.52 | 73.76/71.19/72.68 | 13.60/16.67/13.86 | 68.39/71.04/72.43 |

As can be seen from the table, regardless of whether whitening is applied, RoFormer-Sim outperforms SimBERT on most tasks. This shows that RoFormer-Sim's retrieval performance can indeed be improved after distillation, making this "by-product" quite decent.

Using the same method, we also created a "small" version of RoFormer-Sim. In this case, distillation used the base version of RoFormer-Sim as the teacher model, but the number of distillation steps needed was higher (around 500,000). The final results are as follows:

| | ATEC | BQ | LCQMC | PAWSX | STS-B |
|---|---|---|---|---|---|
| V1small-P1 | 30.68/27.56/29.07 | 43.41/30.89/39.78 | 74.73/73.21/73.50 | 15.89/17.96/16.75 | 70.54/71.39/72.14 |
| V1small-P2 | 31.00/29.14/29.11 | 43.76/36.86/39.84 | 74.21/73.14/73.67 | 16.17/18.12/16.81 | 70.10/71.40/72.28 |
| V1small-P3 | 30.03/21.24/29.30 | 43.72/31.69/40.81 | 72.12/70.27/70.52 | 16.93/21.68/18.75 | 66.55/66.11/69.19 |
| V1small-P4 | 29.52/28.41/28.57 | 43.52/36.56/40.49 | 70.33/68.75/69.01 | 15.39/21.57/16.34 | 64.73/68.12/68.24 |
| V2small-P1 | 37.33/23.59/31.31 | 47.90/29.21/42.07 | 74.72/74.94/74.69 | 13.41/15.30/13.61 | 71.48/69.01/75.10 |
| V2small-P2 | 37.42/31.25/31.18 | 49.15/38.01/41.98 | 75.21/73.47/74.78 | 13.38/15.87/13.69 | 72.06/73.92/75.69 |
| V2small-P3 | 36.71/30.33/31.25 | 49.73/31.03/42.74 | 74.25/72.72/74.19 | 14.58/18.68/14.40 | 69.12/71.07/72.68 |
| V2small-P4 | 32.80/27.87/29.65 | 46.80/36.93/41.31 | 72.30/69.94/72.38 | 13.45/16.93/13.38 | 67.21/70.42/71.39 |

This article introduced and released RoFormer-Sim (SimBERTv2), our upgraded version of SimBERT. It can be used both for generating similar sentences as data augmentation and as a strong baseline for semantic similarity problems. Compared to SimBERT, its biggest feature is that it extends to general sentence types and is no longer limited to similar questions. We welcome readers to explore and share more ways to use it~