By 苏剑林 | September 10, 2021

Among the wide variety of pre-training task designs, NSP (Next Sentence Prediction) is generally considered to be one of the poorer ones. This is because it is relatively easy, and adding it to pre-training has not shown significant benefits for downstream fine-tuning. In fact, the RoBERTa paper showed that it can even have a negative impact. Consequently, subsequent pre-training efforts have generally taken one of two paths: either discarding the NSP task entirely, as RoBERTa did, or finding ways to increase its difficulty, as ALBERT did. In other words, NSP has long been something of an "outcast."

However, the tables have turned, and NSP may be about to make a "comeback." A recent paper, "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task--Next Sentence Prediction" (hereafter referred to as NSP-BERT), shows that NSP can actually achieve very impressive Zero-Shot results! This is another classic case of Prompt-based Few/Zero-Shot learning, but this time, NSP is the protagonist.

Background Recap

We used to believe that pre-training was purely pre-training: it merely provided a better initialization for training on downstream tasks. In BERT, the pre-training tasks are MLM (Masked Language Model) and NSP (Next Sentence Prediction). For a long time, researchers paid little attention to these two tasks themselves and focused instead on how to fine-tune the model for better downstream performance. Even T5, which scaled the model to 11 billion parameters, still followed the "pre-training + fine-tuning" route.

The first to forcefully break this mindset was GPT-3, released last year. It demonstrated that with a sufficiently large pre-trained model, we can design specific templates (Prompts) to achieve excellent Few/Zero-Shot results without any fine-tuning. Where there is GPT, BERT is never far behind. If GPT can do it, BERT should be able to as well. This led to the PET work, which similarly constructed special templates to utilize pre-trained MLM models for Few/Zero-Shot learning. Readers unfamiliar with this can refer to "Is GPT-3 Necessary? No, BERT's MLM Can Also Do Few-Shot Learning".

Since then, work on "pre-training + prompt" has grown steadily and is now arguably exploding. This line of research is generally grouped under "Prompt-based Language Models," and a quick search turns up many examples. A consensus has now formed: an appropriate Prompt that brings the format of the downstream task closer to the pre-training task usually gives better results. How to construct Prompts has therefore become a central question in this research, and P-tuning is a classic example (see "P-tuning: Automatically Constructing Templates to Release the Potential of Language Models").

NSP Enters the Fray

A careful look at Prompt-based research shows that existing work focuses mainly on how to make better use of pre-trained GPT, MLM, or Encoder-Decoder models. Very little attention has gone to other pre-training tasks. NSP-BERT fully exploits the potential of the NSP task, and it shows that even within the "Prompt-based" framework there is still plenty of room for exploration.

The so-called NSP task isn't actually about predicting the "next sentence" in a generative sense, but rather, given two sentences, determining whether they are adjacent. Accordingly, the idea behind NSP-BERT is simple: taking classification as an example, the input is treated as the first sentence, and then each candidate category is added to a specific Prompt to serve as the second sentence. The model then judges which second sentence is most coherent with the first. One can see that the logic of NSP-BERT is very similar to PET; in fact, all Prompt-based work is easy to understand—the difficulty lies in being the first to think of it.
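The classification procedure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the NSP-BERT authors' implementation. `NSPScorer`, `build_candidates`, `nsp_zero_shot_classify`, and `toy_overlap_scorer` are hypothetical names. The scorer is a pluggable function that should return the probability that the second sentence follows the first. Here a toy word-overlap function stands in for a real NSP head so that the example runs without a model.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: any function giving P(second sentence follows first),
# e.g. the softmax output of a pre-trained BERT NSP head.
NSPScorer = Callable[[str, str], float]


def build_candidates(template: str, labels: List[str]) -> List[str]:
    """Fill each candidate label into the prompt template to form a second sentence."""
    return [template.format(label=label) for label in labels]


def nsp_zero_shot_classify(
    text: str,
    labels: List[str],
    template: str,
    scorer: NSPScorer,
) -> Tuple[str, Dict[str, float]]:
    """Return the label whose prompted sentence is the most coherent continuation of `text`."""
    candidates = build_candidates(template, labels)
    # The input is the first sentence; each prompted label is a candidate second sentence.
    scores = {label: scorer(text, cand) for label, cand in zip(labels, candidates)}
    best = max(scores, key=lambda label: scores[label])
    return best, scores


def toy_overlap_scorer(first: str, second: str) -> float:
    """Stand-in for a real NSP head: fraction of words in `second` that also occur in `first`."""
    first_words = set(re.findall(r"\w+", first.lower()))
    second_words = re.findall(r"\w+", second.lower())
    return sum(w in first_words for w in second_words) / len(second_words)


if __name__ == "__main__":
    label, scores = nsp_zero_shot_classify(
        "Stocks fell sharply as finance ministers met.",
        ["sports", "finance", "technology"],
        "This is about {label}.",
        toy_overlap_scorer,
    )
    print(label, scores)
```

To use a real model, one option is Hugging Face Transformers' `BertForNextSentencePrediction`. In that library, class index 0 of the NSP logits means "sentence B continues sentence A," so the softmax probability at index 0 could serve as the scorer. The template wording and any score calibration used in the paper may differ from this sketch.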

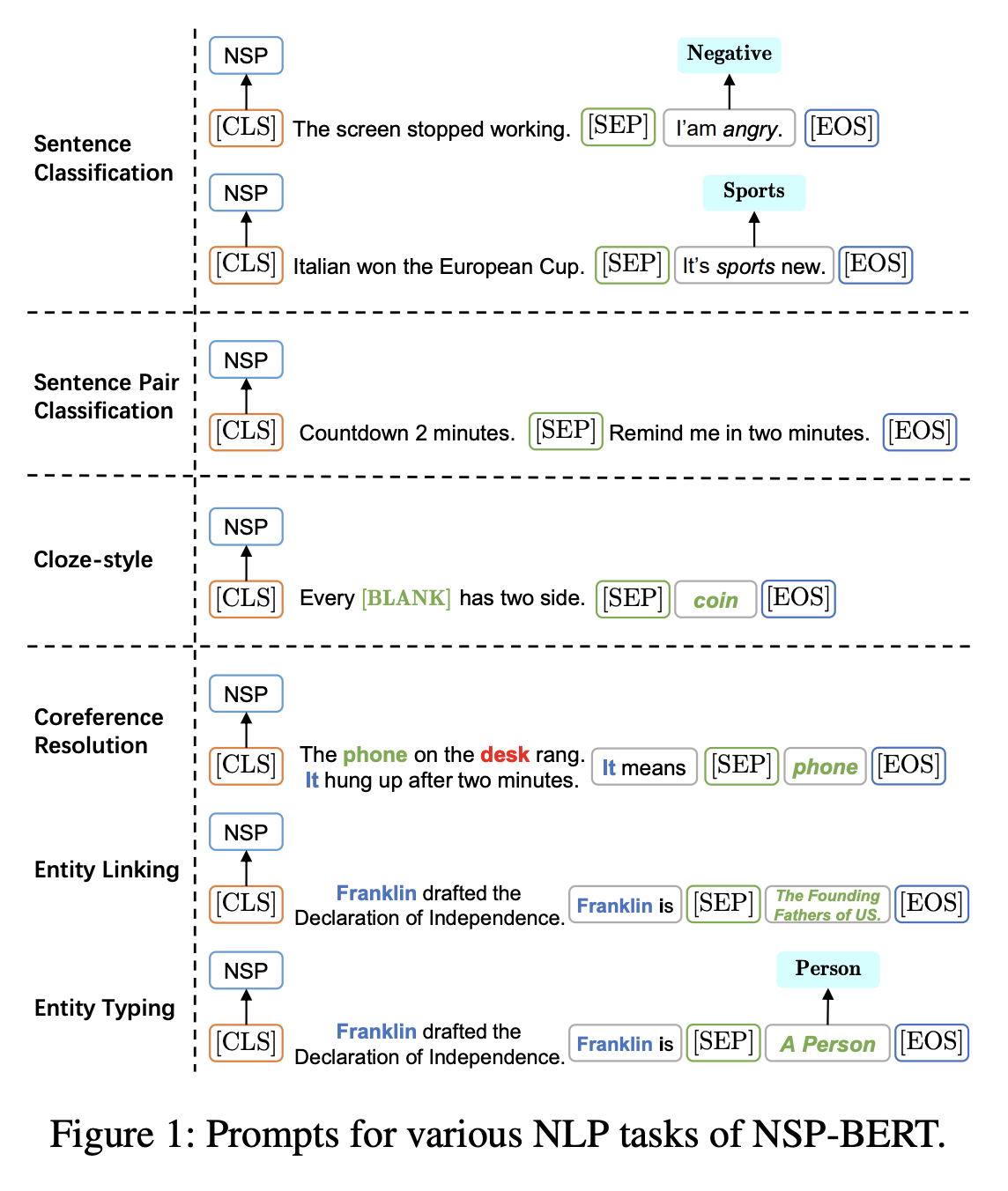

The diagram below demonstrates the Prompt schemes used by NSP-BERT for some common NLU tasks. It shows that NSP-BERT can handle a fair number of tasks:

NSP-BERT Prompts for common NLU tasks

Once you have looked at this diagram, you already understand most of the core ideas of NSP-BERT. The rest of the paper mainly explains the details of this diagram. Readers who want to go deeper can read the original paper.

Strictly speaking, the NSP-BERT approach is not entirely new. Earlier work proposed using NLI models as Zero-Shot classifiers (see "NLI Models as Zero-Shot Classifiers"). That format is essentially the same as NSP, but it requires supervised fine-tuning on labeled NLI data. NSP-BERT is the first attempt to do this with the purely unsupervised NSP task.

Experimental Results

What makes NSP-BERT especially appealing to us is how down-to-earth it is. It was written by Chinese authors, its experiments are on Chinese tasks (FewCLUE and DuEL 2.0), and the code has been open-sourced. The authors' repository is:

Github: https://github.com/sunyilgdx/NSP-BERT

Most importantly, the performance of NSP-BERT is genuinely good:

Zero-Shot results of NSP-BERT

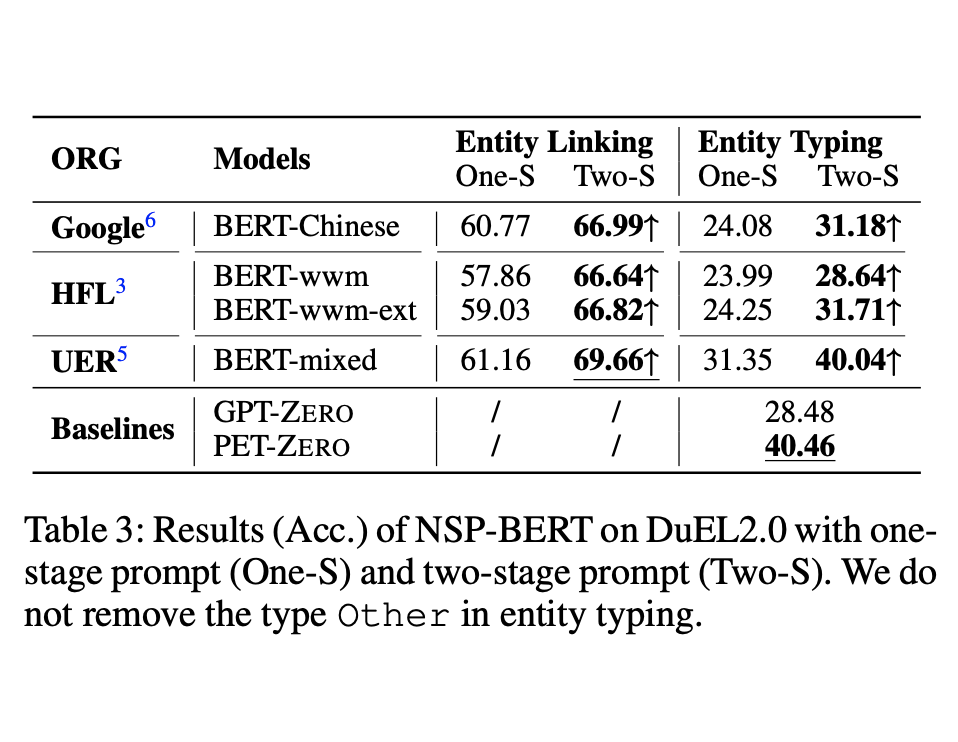

Results on Entity Linking tasks

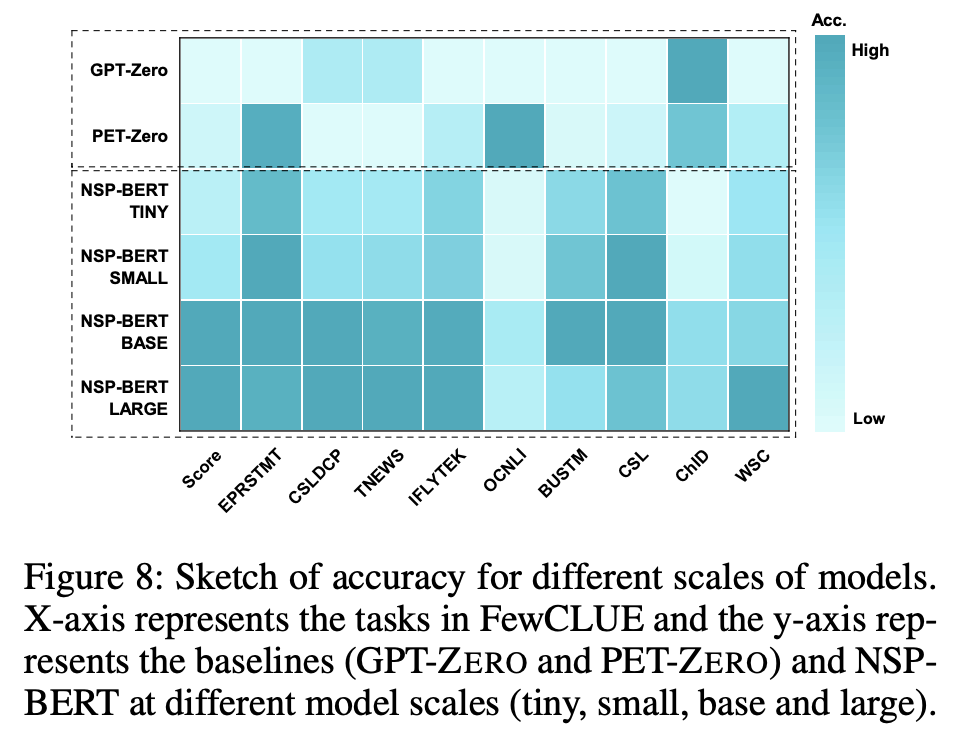

Impact of model scale on performance

After seeing these experimental results, all I can say to NSP is "my apologies for underestimating you." This capability was right in front of us all along, and none of us recognized it. The NSP-BERT authors deserve credit for their keen observation.

Conclusion

This article shared a paper that uses BERT's pre-training task NSP for Zero-Shot learning. The results show that NSP can achieve excellent Zero-Shot performance. Perhaps, in due time, NSP will truly "rise again."