Handling multiple similar tasks with Conditional LayerNorm

By 苏剑林 | April 16, 2021

Not long ago, I saw the "2021 Sohu Campus Text Matching Algorithm Competition" and found the problem quite interesting, so I gave it a try. However, since the competition itself is only for students, I cannot participate as an official contestant. Therefore, I am open-sourcing my approach as a baseline for everyone's reference.

Github Link: https://github.com/bojone/sohu2021-baseline

As the name suggests, the task is text matching—determining whether two texts are similar. This is normally a conventional task, but what's interesting here is that it is divided into multiple subtasks. Specifically, it is divided into two major categories, A and B. Category A has more relaxed matching standards, while Category B has stricter standards. Each major category is further divided into three sub-categories: "Short-Short Matching," "Short-Long Matching," and "Long-Long Matching." Therefore, although the task type is the same, strictly speaking, there are six different subtasks.

Generally speaking, completing this task would require at least two models, as the classification criteria for types A and B are different. If one wanted to be even more precise, one might even create six models. However, the problem is that training six models independently is often laborious, and the models cannot learn from each other to improve performance. Naturally, we should think about sharing a portion of the parameters to turn this into a multi-task learning problem.

Of course, viewing this as a standard multi-task learning problem might be too generic. Given the characteristics of these tasks—"same form, different standards"—I conceived a plan to use a single model for all six subtasks through Conditional LayerNorm.

Regarding Conditional LayerNorm, we previously introduced it in the article "Conditional Text Generation based on Conditional Layer Normalization." Although the example there was text generation, its applicable scenarios are not limited to that. Simply put, Conditional LayerNorm is a scheme for injecting a condition vector into a Transformer to control its output: the condition is incorporated into the $\beta$ and $\gamma$ parameters of the LayerNorm layers.
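To make the idea concrete, here is a minimal pure-Python sketch of one way to let a condition vector shift the $\gamma$ and $\beta$ of a LayerNorm. It is an illustration only, not the bert4keras implementation: the function names and the projection matrices `W_gamma` and `W_beta` are hypothetical, and the additive form is an assumption about how the condition enters.

```python
import math

def layer_norm(x, gamma, beta, eps=1e-12):
    """Standard LayerNorm over a single feature vector x."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(x, gamma, beta)]

def matvec(W, c):
    """Multiply a (d_model x d_cond) matrix by a d_cond vector."""
    return [sum(w * ci for w, ci in zip(row, c)) for row in W]

def conditional_layer_norm(x, cond, gamma, beta, W_gamma, W_beta):
    """LayerNorm whose gain and bias are shifted by projections of `cond`.

    Assumed form: gamma' = gamma + W_gamma @ cond, beta' = beta + W_beta @ cond.
    """
    gamma_c = [g + dg for g, dg in zip(gamma, matvec(W_gamma, cond))]
    beta_c = [b + db for b, db in zip(beta, matvec(W_beta, cond))]
    return layer_norm(x, gamma_c, beta_c)
```

With `W_gamma` and `W_beta` set to all zeros, this sketch reduces exactly to ordinary LayerNorm, so a pretrained model's behaviour would be unchanged at the start of fine-tuning and the condition only takes effect as the projections are learned.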

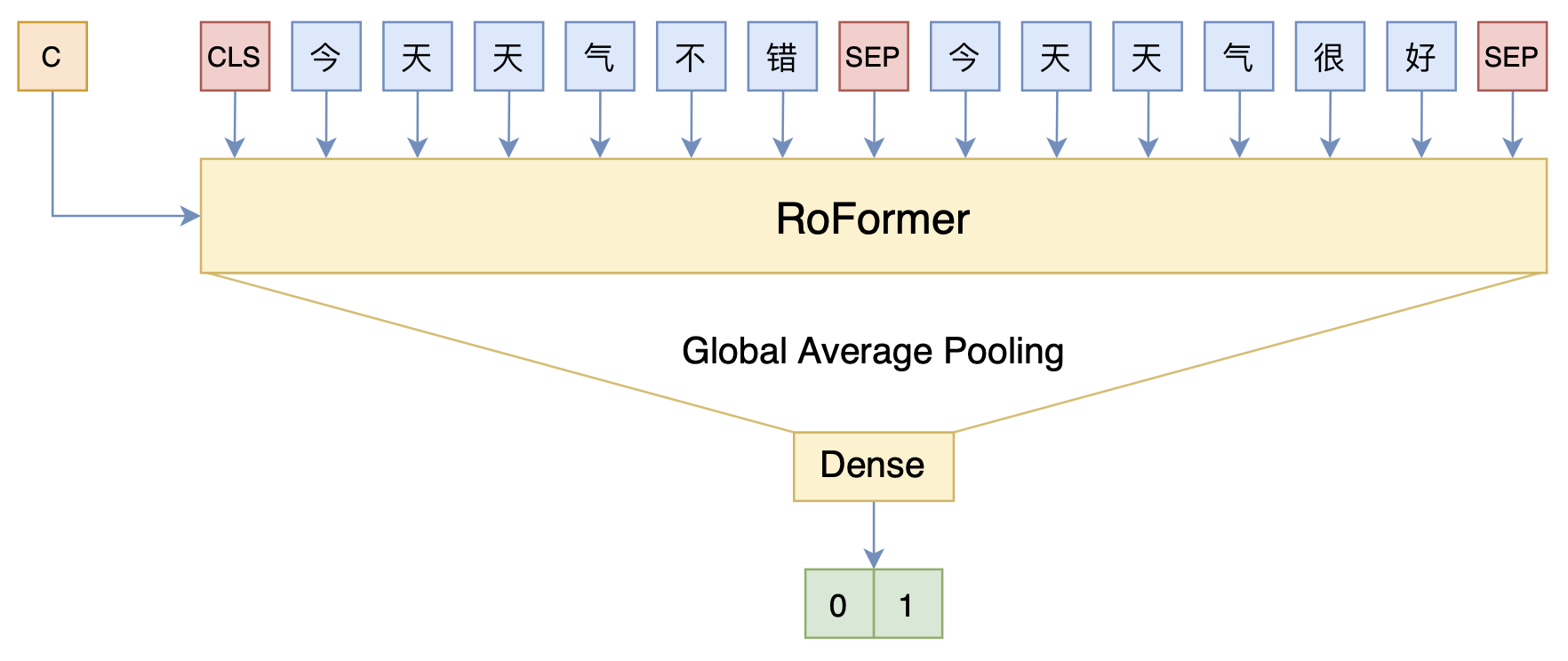

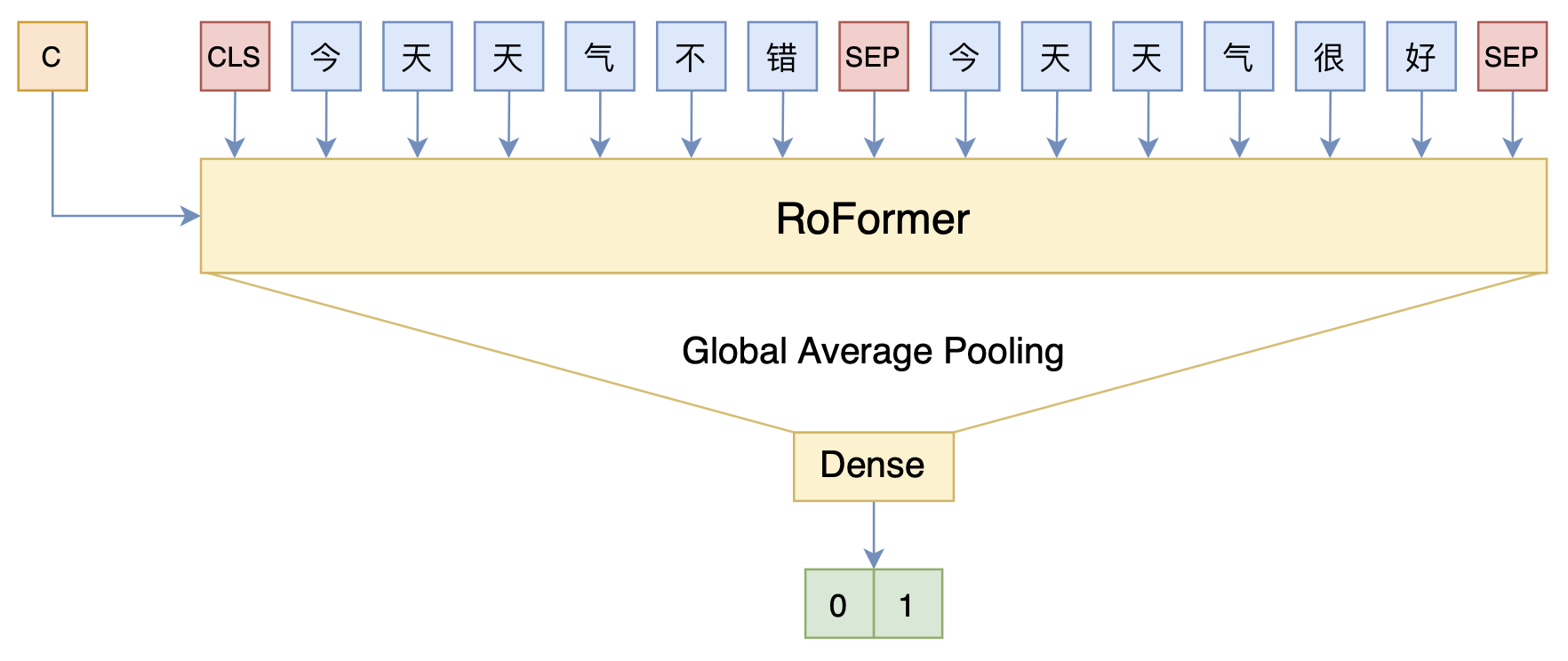

For the six tasks in this competition, we only need to pass the task type as a condition into the model. This allows a single model to handle six different tasks. The schematic diagram is as follows:

Figure: Handling multiple similar tasks with Conditional LayerNorm

In this way, the entire model is shared. We simply input the task type ID alongside the sentence input, achieving maximum parameter sharing.
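As a rough sketch of what "inputting the task type ID alongside the sentence" could look like on the data side, the snippet below enumerates the six subtasks and attaches an ID to each example. The names `TASK_TO_ID` and `build_inputs`, the dictionary keys, and the particular ID assignment are all hypothetical; any fixed one-to-one mapping would serve, and the repository may encode the condition differently.

```python
from itertools import product

CATEGORIES = ("A", "B")  # relaxed vs. strict matching standard
LENGTH_TYPES = ("short-short", "short-long", "long-long")

# Hypothetical ID assignment: (category, length type) -> integer in 0..5.
TASK_TO_ID = {task: i for i, task in enumerate(product(CATEGORIES, LENGTH_TYPES))}

def build_inputs(token_ids, segment_ids, category, length_type):
    """Bundle one example's text inputs with its task-condition ID."""
    return {
        "token_ids": token_ids,
        "segment_ids": segment_ids,
        "task_id": TASK_TO_ID[(category, length_type)],
    }
```

Inside the model, the `task_id` would typically be looked up in a small embedding table, and the resulting vector used as the LayerNorm condition.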

The implementation of Conditional LayerNorm has long been integrated into bert4keras. Once this design was conceived, implementing it with bert4keras became a smooth process. Reference code is as follows:

Github Link: https://github.com/bojone/sohu2021-baseline

The code uses RoFormer as the base model. This is mainly because in "Long-Long Matching," the two concatenated texts can be very long. The word-level RoFormer (which tokenizes Chinese text into words rather than single characters) yields shorter sequences, so longer texts can be processed with the same compute. Furthermore, the RoPE (Rotary Position Embedding) used by RoFormer can, in theory, handle sequences of any length. Over a few runs of the code, the offline F1 score was around 0.74, and the online test set F1 score after submission was around 0.73. On an RTX 3090, a single epoch takes about an hour, and 4 or 5 epochs are generally sufficient.

The current code shuffles the data from all six subtasks together for training. This has a small drawback: short samples that share a batch with long ones are padded up to the longest length in that batch, which slows down training on short sequences (though it is certainly still faster than training six models independently). One possible optimization is to group samples of similar length into the same batch, but I was too lazy to write that part, so I'll leave that optimization to everyone else!
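For readers who want to try that optimization, here is a minimal sketch of length-bucketed batching in plain Python. It is not part of the released baseline; the function names and the `bucket_batches` parameter are hypothetical, and samples are assumed to be lists of token IDs.

```python
import random

def length_bucketed_batches(samples, batch_size, bucket_batches=50, seed=0):
    """Group samples of similar length into the same batch.

    Samples are shuffled, cut into buckets of `batch_size * bucket_batches`
    items, sorted by length inside each bucket, and sliced into batches.
    The batches are then shuffled so training order stays random.
    """
    rng = random.Random(seed)
    samples = list(samples)
    rng.shuffle(samples)
    bucket_size = batch_size * bucket_batches
    batches = []
    for start in range(0, len(samples), bucket_size):
        bucket = sorted(samples[start:start + bucket_size], key=len)
        batches.extend(bucket[i:i + batch_size]
                       for i in range(0, len(bucket), batch_size))
    rng.shuffle(batches)
    return batches

def pad_batch(batch, pad_id=0):
    """Pad every sample only up to the longest sample in its own batch."""
    max_len = max(len(s) for s in batch)
    return [list(s) + [pad_id] * (max_len - len(s)) for s in batch]
```

A larger `bucket_batches` gives tighter length grouping at the cost of less randomness within each batch, so it is a knob worth tuning rather than a fixed recommendation.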

This article shares a baseline for the Sohu text matching competition. It primarily uses Conditional LayerNorm to increase model diversity, enabling a single model to process different types of data and generate different outputs.